Introduction

The Web

Catalysts for Human Progress

Since the dawn of mankind, biological evolution has shaped us into social creatures. The social capabilities of humans are however much more evolved than those of most other species. For example, humans are one of the only animals that have clearly visible eye whites. This allows people to see what other people are looking at, which simplifies collaborative tasks. Furthermore, theory of mind, the ability to understand that others have different perspectives, is much more pronounced in humans than in other animals, which also strengthens our ability to collaborate. While our collaborative capabilities were initially limited to physical tasks, the adoption of language and writing allowed us to share knowledge with each other.

Methods for sharing knowledge are essential catalysts for human progress, as shared knowledge allows larger groups of people to share goals and accomplish tasks that would have been impossible otherwise. Due to our technological progress, the bandwidth of these methods for sharing knowledge is always growing broader, which is continuously increasing the rate of human and technological progress.

Throughout the last centuries, we saw three major revolutions in bandwidth. First, the invention of the printing press in the 15th century drastically increased the rate at which books could be duplicated. Second, there was the invention of radio and television in the 20th century. As audio and video are cognitively less demanding than reading, this lowered the barrier for spreading knowledge even further. Third, we had the development of the internet near the end of the 20th century, and the invention of the World Wide Web in 1989 on top of that, which gave us a globally interlinked information space. Like the inventions before, the Web is fully open and decentralized, where anyone can say anything about anything. With the Web, bandwidth for knowledge sharing has become nearly unlimited, as knowledge no longer has to go through a few large radio or TV stations, but can now be shared over a virtually unlimited number of Web pages, which leads to a more social human species.

Impact of the Web

At the time of writing, the Web is 30 years old. Considering our species is believed to be 300,000 years old, this is just 0.01% of the time we have been around. To put this in perspective in terms of a human life, the Web would only be a baby of just under 3 days old, assuming a life expectancy of 80 years. This means that the Web just got started, and it will take a long time for it to mature and to achieve its full potential.
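The two figures in this comparison can be checked with a short calculation. The year length of 365.25 days is an assumption made here for the conversion; the other numbers come from the paragraph above.

```latex
\[
\frac{30\ \text{years}}{300\,000\ \text{years}} = 10^{-4} = 0.01\%,
\qquad
80\ \text{years} \times 365.25\ \tfrac{\text{days}}{\text{year}} \times 10^{-4} \approx 2.9\ \text{days}.
\]
```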

Even in this short amount of time, the Web has already transformed our world in an unprecedented way. Most importantly, it has given more than 56% of the global population access to most of all human knowledge behind a finger’s touch. Secondly, social media has enabled people to communicate with anyone on the planet near-instantly, and even with multiple people at the same time. Furthermore, it has impacted politics and even caused oppressive regimes to be overthrown. Next to that, it is also significantly disrupting business models that have been around since the industrial revolution, and creating new ones.

Knowledge Graphs

The Web has made a significant positive impact on the world. Yet, the goal of curiosity-driven researchers is to uncover what the next steps are to improve the world even more.

In 2001, Tim Berners-Lee shared his dream [1] where machines would be able to help out with our day-to-day tasks by analyzing data on the Web and acting as intelligent agents. Back then, the primary goal of the Web was to be human-readable. In order for this dream to become a reality, the Web had to become machine-readable. This Web extension is typically referred to as the Semantic Web.

Now, almost twenty years later, several standards and technologies have been developed to make this dream a reality. In 2013, more than four million Web domains were already using these technologies. Using these Semantic Web technologies, so-called knowledge graphs are being constructed by many major companies world-wide, such as Google and Microsoft. A knowledge graph [2] is a collection of structured information that is organized in a graph. These knowledge graphs are being used to support tasks that were part of Tim Berners-Lee’s original vision, such as managing day-to-day tasks with the Google Now assistant.

The standard for modeling knowledge graphs is the Resource Description Framework (RDF) [3]. Fundamentally, it is based around the concept of triples that are used to make statements about things. A triple is made up of a subject, predicate and object, where the subject and object are resources (or things), and the predicate denotes their relationship. For example, Fig. 1 shows a simple triple indicating that one person knows another. Multiple resources can be combined with each other through multiple triples, which forms a graph. Fig. 2 shows an example of such a graph, which contains knowledge about a person.

In order to look up information within such graphs, the SPARQL query language [4] was introduced as a standard. Essentially, SPARQL allows RDF data to be looked up through combinations of triple patterns, which are triples where any element can be replaced with a variable such as ?name. For example, Listing 1 contains a SPARQL query that finds the names of all people that Alice knows.

Fig. 1: A triple indicating that Alice knows Bob.

Fig. 2: A small knowledge graph about Alice.

SELECT ?name WHERE {
  Alice knows ?person.
  ?person name ?name.
}

Listing 1: A SPARQL query selecting the names of all people that Alice knows.

The single result of this query would be "Bob".

Full URIs are omitted in this example.
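To make the notion of triple patterns more concrete, the following minimal sketch evaluates the two patterns of Listing 1 over a tiny in-memory graph. It is an illustration under assumptions, not how a real SPARQL engine works: the contents of `GRAPH` are assumed (the exact triples of Fig. 2 are not reproduced here), plain strings stand in for full URIs, and the helper names `match_pattern` and `evaluate` are hypothetical.

```python
from typing import Dict, List, Optional, Tuple

Triple = Tuple[str, str, str]
Binding = Dict[str, str]

# Assumed example graph; plain strings stand in for full URIs.
GRAPH: List[Triple] = [
    ("Alice", "knows", "Bob"),
    ("Alice", "name", "Alice"),
    ("Bob", "name", "Bob"),
]


def is_variable(term: str) -> bool:
    """Terms starting with '?' are variables, as in SPARQL."""
    return term.startswith("?")


def match_pattern(pattern: Triple, triple: Triple, binding: Binding) -> Optional[Binding]:
    """Extend `binding` so that `pattern` matches `triple`, or return None."""
    result = dict(binding)
    for p_term, t_term in zip(pattern, triple):
        if is_variable(p_term):
            # A variable must keep the value it was bound to earlier.
            if result.get(p_term, t_term) != t_term:
                return None
            result[p_term] = t_term
        elif p_term != t_term:
            return None
    return result


def evaluate(patterns: List[Triple], graph: List[Triple]) -> List[Binding]:
    """Evaluate a conjunction of triple patterns with naive nested loops."""
    bindings: List[Binding] = [{}]
    for pattern in patterns:
        extended_bindings: List[Binding] = []
        for binding in bindings:
            for triple in graph:
                extended = match_pattern(pattern, triple, binding)
                if extended is not None:
                    extended_bindings.append(extended)
        bindings = extended_bindings
    return bindings


# The two triple patterns of Listing 1.
query = [("Alice", "knows", "?person"), ("?person", "name", "?name")]
names = [binding["?name"] for binding in evaluate(query, GRAPH)]
print(names)
```

The shared variable ?person is what joins the two patterns: only bindings in which both patterns agree on its value survive the second loop.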

Evolving Knowledge Graphs

Within Big Data, we talk about the four V’s: volume, velocity, variety and veracity. As the Web meets these requirements, it can be seen as a global Big Dataset. Specifically, the Web is highly volatile, as it is continuously evolving, and it does so at an increasing rate. For example, Google is processing more than 40,000 search requests every second, 500 hours of video are being uploaded to YouTube every minute, and more than 5,000 tweets are being sent every second.

A lot of research and engineering work is needed to make it possible to handle this evolving data. For instance, it should be possible to store all of this data as fast as possible, and to make it searchable for knowledge as soon as possible. This is important, as there is a lot of value in evolving knowledge. For example, by tracking the evolution of biomedical information, the spread of diseases can be reduced, and by observing highway sensors, traffic jams may be avoided by preemptively rerouting traffic.

Due to the (RDF) knowledge graph model currently being atemporal, the usage of evolving knowledge graphs remains limited. As such, research and engineering effort is needed for new models, storage techniques, and query algorithms for evolving knowledge graphs. That is why evolving knowledge graphs are the main focus of my research.

Decentralized Knowledge Graphs

As stated by Tim Berners-Lee, the Web is for everyone. This means that the Web is a free platform (as in freedom, not free beer), where anyone can say anything about anything, and anyone can access anything that has been said. This is directly compatible with Article 19 of the Universal Declaration of Human Rights, which says the following:

Everyone has the right to freedom of opinion and expression; this right includes freedom to hold opinions without interference and to seek, receive and impart information and ideas through any media and regardless of frontiers.

The original Web standards and technologies have been designed with this fundamental right in mind. However, in recent years, the Web has been growing towards more centralized entities, where this right is being challenged.

The current centralized knowledge graphs do not match well with the original decentralized nature of the Web. At the time of writing, these new knowledge graphs are in the hands of a few large corporations, and intelligent agents on top of them are restricted to what these corporations allow them to do. As people depend on the capabilities of these knowledge graphs, large corporations gain significant control over the Web. In the last couple of years, these centralized powers have proven to be problematic, for example when the flow of information is redirected to influence election results, when personal information is being misused, or when information is being censored due to ideological differences. This shows that our freedom of expression is being challenged by these large centralized entities, as there is clear interference of opinions through redirection of the information flow, and obstruction to receive information through censorship.

For these reasons, there is a massive push for re-decentralizing the Web, where people regain ownership of their data. Decentralization is however technologically difficult, as applications typically require a single centralized entrypoint from which data is retrieved, and no such single entrypoint exists in a truly decentralized environment. Although people do want ownership of their data, they do not want to give up their intelligent agents. As such, this decentralization wave requires significant research effort to achieve the same capabilities as these centralized knowledge graphs, which is why this is an important factor within my research. Specifically, I focus on supporting knowledge graphs on the Web, instead of only being available behind closed doors, so that they are available for everyone.

Research Question

The goal of my research is to allow people to publish and find knowledge without having to depend on large centralized entities, with a focus on knowledge that evolves over time. This led me to the following research question for my PhD:

How to store and query evolving knowledge graphs on the Web?

During my research, I focus on four main challenges related to this research question:

- Experimentation requires representative evolving data.

In order to evaluate the performance of systems that handle evolving knowledge graphs, a flexible method for obtaining such data needs to be available.

- Indexing evolving data involves a trade-off between storage efficiency and lookup efficiency.

Indexing techniques are used to improve the efficiency of querying, but come at the cost of increased storage space and preprocessing time. As such, it is important to find a good balance between the amount of storage space with its indexing time, and the amount of querying speedup, so that evolving data can be stored in a Web-friendly way.

- Web interfaces are highly heterogeneous.

Before knowledge graphs can be queried from the Web, the different interfaces through which data are available, and the different algorithms with which data can be retrieved, need to be combinable.

- Publishing evolving data via a queryable interface involves continuous updates to clients.

Centralized querying interfaces are hard to scale for an increasing number of concurrent clients, especially when the knowledge graphs that are being queried over are continuously evolving, and clients need to be notified of data updates continuously. New kinds of interfaces and querying algorithms are needed to cope with this scalability issue.
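The trade-off named in the second challenge can be illustrated with two deliberately naive ways of storing the versions of a small evolving graph. This is a sketch under assumptions and not the storage architecture introduced later in this dissertation: the class names `SnapshotStore` and `DeltaStore`, their methods, and the example triples are all hypothetical.

```python
from typing import FrozenSet, List, Set, Tuple

Triple = Tuple[str, str, str]


class SnapshotStore:
    """Keeps a full copy of every version: cheap lookups, costly storage."""

    def __init__(self) -> None:
        self.versions: List[FrozenSet[Triple]] = []

    def append(self, triples: Set[Triple]) -> None:
        self.versions.append(frozenset(triples))

    def materialize(self, version: int) -> Set[Triple]:
        # A version is available directly, without any reconstruction.
        return set(self.versions[version])

    def stored_triples(self) -> int:
        return sum(len(version) for version in self.versions)


class DeltaStore:
    """Keeps the first version plus per-version additions and deletions."""

    def __init__(self) -> None:
        self.base: Set[Triple] = set()
        self.deltas: List[Tuple[Set[Triple], Set[Triple]]] = []
        self._latest: Set[Triple] = set()  # only used to compute the next delta
        self._has_base = False

    def append(self, triples: Set[Triple]) -> None:
        if not self._has_base:
            self.base = set(triples)
            self._has_base = True
        else:
            additions = triples - self._latest
            deletions = self._latest - triples
            self.deltas.append((additions, deletions))
        self._latest = set(triples)

    def materialize(self, version: int) -> Set[Triple]:
        # Lookups replay all deltas up to the requested version.
        current = set(self.base)
        for additions, deletions in self.deltas[:version]:
            current = (current - deletions) | additions
        return current

    def stored_triples(self) -> int:
        return len(self.base) + sum(len(a) + len(d) for a, d in self.deltas)


# Three versions of a tiny, made-up graph.
v0 = {("Alice", "knows", "Bob"), ("Bob", "name", "Bob")}
v1 = v0 | {("Alice", "knows", "Carol")}
v2 = v1 - {("Alice", "knows", "Bob")}

snapshots, deltas = SnapshotStore(), DeltaStore()
for version in (v0, v1, v2):
    snapshots.append(version)
    deltas.append(version)

print(snapshots.stored_triples(), deltas.stored_triples())
```

Both stores return the same triples for every version, but the snapshot store pays in storage while the delta store pays in lookup work that grows with the number of versions.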

Outline

Corresponding to my four research challenges, this thesis bundles the following four peer-reviewed publications as separate chapters, for which I am the lead author:

- Ruben Taelman et al. Generating Public Transport Data based on Population Distributions for RDF Benchmarking.

In: Semantic Web Journal. IOS Press, 2019.

- Ruben Taelman et al. Triple Storage for Random-Access Versioned Querying of RDF Archives.

In: Journal of Web Semantics. Elsevier, 2019.

- Ruben Taelman et al. Comunica: a Modular SPARQL Query Engine for the Web.

In: International Semantic Web Conference. Springer, October 2018.

- Ruben Taelman et al. Continuous Client-side Query Evaluation over Dynamic Linked Data.

In: The Semantic Web: ESWC 2016 Satellite Events, Revised Selected Papers. Springer, May 2016.

In Chapter 2, a mimicking algorithm (PoDiGG) is introduced for generating realistic evolving public transport data, so that it can be used to benchmark systems that work with evolving data. This algorithm is based on established concepts for designing public transport networks, and takes into account population distributions for simulating the flow of vehicles. Next, in Chapter 3, a storage architecture and querying algorithms are introduced for managing evolving data. It has been implemented as a system called OSTRICH, and extensive experimentation shows that this system introduces a useful trade-off between storage size and querying efficiency for publishing evolving knowledge graphs on the Web. In Chapter 4, a modular query engine called Comunica is introduced that is able to cope with the heterogeneity of data on the Web. This engine has been designed to be highly flexible, so that it simplifies research within the query domain, where new query algorithms can for example be developed in a separate module, and plugged into the engine without much effort. In Chapter 5, a low-cost publishing interface and accompanying querying algorithm (TPF Query Streamer) is introduced and evaluated to enable continuous querying of evolving data with a low volatility. Finally, this work is concluded in Chapter 6 and future research opportunities are discussed.

Publications

This section provides a chronological overview of all publications in international conferences and scientific journals that I worked on during my PhD. For each of them, I briefly explain its relationship to this dissertation.

2016

- Ruben Taelman, Ruben Verborgh, Pieter Colpaert, Erik Mannens, Rik Van de Walle. Continuously Updating Query Results over Real-Time Linked Data. Published in proceedings of the 2nd Workshop on Managing the Evolution and Preservation of the Data Web. CEUR-WS, May 2016.

Workshop paper that received the best paper award at MEPDaW 2016. Because of this, an extended version was published as well: “Continuous Client-Side Query Evaluation over Dynamic Linked Data”.

- Ruben Taelman. Continuously Self-Updating Query Results over Dynamic Heterogeneous Linked Data. Published in The Semantic Web. Latest Advances and New Domains: 13th International Conference, ESWC 2016, Heraklion, Crete, Greece, May 29 – June 2, 2016.

PhD Symposium paper in which I outlined the goals of my PhD.

- Ruben Taelman, Ruben Verborgh, Pieter Colpaert, Erik Mannens, Rik Van de Walle. Moving Real-Time Linked Data Query Evaluation to the Client. Published in proceedings of the 13th Extended Semantic Web Conference: Posters and Demos.

Accompanying poster of “Continuously Updating Query Results over Real-Time Linked Data”.

- Ruben Taelman, Ruben Verborgh, Pieter Colpaert, Erik Mannens. Continuous Client-Side Query Evaluation over Dynamic Linked Data. Published in The Semantic Web: ESWC 2016 Satellite Events, Heraklion, Crete, Greece, May 29 – June 2, 2016, Revised Selected Papers.

Extended version of “Continuously Updating Query Results over Real-Time Linked Data”. Included in this dissertation as Chapter 5.

- Ruben Taelman, Pieter Colpaert, Ruben Verborgh, Erik Mannens. Multidimensional Interfaces for Selecting Data within Ordinal Ranges. Published in proceedings of the 7th International Workshop on Consuming Linked Data.

Introduction of an index-based server interface for publishing ordinal data. I worked on this due to the need for a generic interface for exposing data with some kind of order (such as temporal data), which I identified in the article “Continuously Updating Query Results over Real-Time Linked Data”.

- Ruben Taelman, Pieter Heyvaert, Ruben Verborgh, Erik Mannens. Querying Dynamic Datasources with Continuously Mapped Sensor Data. Published in proceedings of the 15th International Semantic Web Conference: Posters and Demos.

Demonstration of the system introduced in “Continuously Updating Query Results over Real-Time Linked Data”, when applied to a thermometer that continuously produces raw values.

- Pieter Heyvaert, Ruben Taelman, Ruben Verborgh, Erik Mannens. Linked Sensor Data Generation using Queryable RML Mappings. Published in proceedings of the 15th International Semantic Web Conference: Posters and Demos.

Counterpart of the demonstration “Querying Dynamic Datasources with Continuously Mapped Sensor Data” that focuses on explaining the mappings from raw values to RDF.

- Ruben Taelman, Ruben Verborgh, Erik Mannens. Exposing RDF Archives using Triple Pattern Fragments. Published in proceedings of the 20th International Conference on Knowledge Engineering and Knowledge Management: Posters and Demos.

Poster paper in which I outlined my future plans to focus on the publication and querying of evolving knowledge graphs through a low-cost server interface.

2017

- Anastasia Dimou, Pieter Heyvaert, Ruben Taelman, Ruben Verborgh. Modeling, Generating, and Publishing Knowledge as Linked Data. Published in Knowledge Engineering and Knowledge Management: EKAW 2016 Satellite Events, EKM and Drift-an-LOD, Bologna, Italy, November 19–23, 2016, Revised Selected Papers (2017).

Tutorial in which I presented techniques for publishing and querying knowledge graphs.

- Ruben Taelman, Ruben Verborgh, Tom De Nies, Erik Mannens. PoDiGG: A Public Transport RDF Dataset Generator. Published in proceedings of the 26th International Conference Companion on World Wide Web (2017).

Demonstration of the PoDiGG system for which the journal paper “Generating Public Transport Data based on Population Distributions for RDF Benchmarking” was under submission at that point.

- Ruben Taelman, Miel Vander Sande, Ruben Verborgh, Erik Mannens. Versioned Triple Pattern Fragments: A Low-cost Linked Data Interface Feature for Web Archives. Published in proceedings of the 3rd Workshop on Managing the Evolution and Preservation of the Data Web (2017).

Introduction of a low-cost server interface for publishing evolving knowledge graphs.

- Ruben Taelman, Miel Vander Sande, Ruben Verborgh, Erik Mannens. Live Storage and Querying of Versioned Datasets on the Web. Published in proceedings of the 14th Extended Semantic Web Conference: Posters and Demos (2017).

Demonstration of the interface introduced in “Versioned Triple Pattern Fragments: A Low-cost Linked Data Interface Feature for Web Archives”.

- Joachim Van Herwegen, Ruben Taelman, Sarven Capadisli, Ruben Verborgh. Describing configurations of software experiments as Linked Data. Published in proceedings of the First Workshop on Enabling Open Semantic Science (SemSci) (2017).

Introduction of techniques for semantically annotating software experiments. This work offered a basis for the article on “Comunica: a Modular SPARQL Query Engine for the Web” which was in progress by then.

- Ruben Taelman, Ruben Verborgh. Declaratively Describing Responses of Hypermedia-Driven Web APIs. Published in proceedings of the 9th International Conference on Knowledge Capture (2017).

Theoretical work on offering techniques for distinguishing between different hypermedia controls. This need was identified when working on “Versioned Triple Pattern Fragments: A Low-cost Linked Data Interface Feature for Web Archives”.

2018

- Julián Andrés Rojas Meléndez, Brecht Van de Vyvere, Arne Gevaert, Ruben Taelman, Pieter Colpaert, Ruben Verborgh. A Preliminary Open Data Publishing Strategy for Live Data in Flanders. Published in proceedings of the 27th International Conference Companion on World Wide Web.

Comparison of pull-based and push-based strategies for querying evolving knowledge graphs within client-server environments.

- Ruben Taelman, Miel Vander Sande, Ruben Verborgh. OSTRICH: Versioned Random-Access Triple Store. Published in proceedings of the 27th International Conference Companion on World Wide Web.

Demonstration of “Triple Storage for Random-Access Versioned Querying of RDF Archives” that was under submission at that point.

- Ruben Taelman, Miel Vander Sande, Ruben Verborgh. Components.js: A Semantic Dependency Injection Framework. Published in proceedings of The Web Conference: Developers Track.

Introduction of a dependency injection framework that was developed for “Comunica: a Modular SPARQL Query Engine for the Web”.

- Ruben Taelman, Miel Vander Sande, Ruben Verborgh. Versioned Querying with OSTRICH and Comunica in MOCHA 2018. Published in proceedings of the 5th SemWebEval Challenge at ESWC 2018.

Experimentation on the combination of the systems from “Comunica: a Modular SPARQL Query Engine for the Web” and “Triple Storage for Random-Access Versioned Querying of RDF Archives”, which both were under submission at that point.

- Ruben Taelman, Pieter Colpaert, Erik Mannens, Ruben Verborgh. Generating Public Transport Data based on Population Distributions for RDF Benchmarking. Published in Semantic Web Journal.

Journal publication on PoDiGG. Included in this dissertation as Chapter 2.

- Ruben Taelman, Miel Vander Sande, Joachim Van Herwegen, Erik Mannens, Ruben Verborgh. Triple Storage for Random-Access Versioned Querying of RDF Archives. Published in Journal of Web Semantics.

Journal publication on OSTRICH. Included in this dissertation as Chapter 3.

- Ruben Taelman, Riccardo Tommasini, Joachim Van Herwegen, Miel Vander Sande, Emanuele Della Valle, Ruben Verborgh. On the Semantics of TPF-QS towards Publishing and Querying RDF Streams at Web-scale. Published in proceedings of the 14th International Conference on Semantic Systems.

Follow-up work on “Continuous Client-Side Query Evaluation over Dynamic Linked Data”, in which more extensive benchmarking was done, and formalisations were introduced.

- Ruben Taelman, Hideaki Takeda, Miel Vander Sande, Ruben Verborgh. The Fundamentals of Semantic Versioned Querying. Published in proceedings of the 12th International Workshop on Scalable Semantic Web Knowledge Base Systems co-located with 17th International Semantic Web Conference.

Introduction of formalisations for performing semantic versioned queries. This was identified as a need when working on “Triple Storage for Random-Access Versioned Querying of RDF Archives”.

- Joachim Van Herwegen, Ruben Taelman, Miel Vander Sande, Ruben Verborgh. Demonstration of Comunica, a Web framework for querying heterogeneous Linked Data interfaces. Published in proceedings of the 17th International Semantic Web Conference: Posters and Demos.

Demonstration of “Comunica: a Modular SPARQL Query Engine for the Web”.

- Ruben Taelman, Miel Vander Sande, Ruben Verborgh. GraphQL-LD: Linked Data Querying with GraphQL. Published in proceedings of the 17th International Semantic Web Conference: Posters and Demos.

Demonstration of a GraphQL-based query language, as a developer-friendly alternative to SPARQL.

- Ruben Taelman, Joachim Van Herwegen, Miel Vander Sande, Ruben Verborgh. Comunica: a Modular SPARQL Query Engine for the Web. Published in proceedings of the 17th International Semantic Web Conference.

Conference publication on Comunica. Included in this dissertation as Chapter 4.

2019

- Brecht Van de Vyvere, Ruben Taelman, Pieter Colpaert, Ruben Verborgh. Using an existing website as a queryable low-cost LOD publishing interface. Published in proceedings of the 16th Extended Semantic Web Conference: Posters and Demos (2019).

Extension of “Comunica: a Modular SPARQL Query Engine for the Web” to query over semantically annotated paginated websites.

- Ruben Taelman, Miel Vander Sande, Joachim Van Herwegen, Erik Mannens, Ruben Verborgh. Reflections on: Triple Storage for Random-Access Versioned Querying of RDF Archives. Published in proceedings of the 18th International Semantic Web Conference (2019).

Conference presentation on “Triple Storage for Random-Access Versioned Querying of RDF Archives”.

- Miel Vander Sande, Sjors de Valk, Enno Meijers, Ruben Taelman, Herbert Van de Sompel, Ruben Verborgh. Discovering Data Sources in a Distributed Network of Heritage Information. Published in proceedings of the Posters and Demo Track of the 15th International Conference on Semantic Systems (2019).

Demonstration of an infrastructure to optimize federated querying over multiple sources, making use of “Comunica: a Modular SPARQL Query Engine for the Web”.

- Raf Buyle, Ruben Taelman, Katrien Mostaert, Geroen Joris, Erik Mannens, Ruben Verborgh, Tim Berners-Lee. Streamlining governmental processes by putting citizens in control of their personal data. Published in proceedings of the 6th International Conference on Electronic Governance and Open Society: Challenges in Eurasia (2019).

Reporting on a proof-of-concept within the Flemish government to use the decentralised Solid ecosystem for handling citizen data.

Generating Synthetic Evolving Data

In this chapter, we address the first challenge of this PhD, namely: “Experimentation requires representative evolving data”. This challenge is a prerequisite to the next challenges, in which storage and querying techniques are introduced for evolving data. In order to evaluate the performance of storage and querying systems that handle evolving knowledge graphs, we must first have such knowledge graphs available to us. Ideally, real-world knowledge graphs should be used, as these can show the true performance of such systems in various circumstances. However, these real-world knowledge graphs have limited public availability, and do not allow for the required flexibility when evaluating systems. For example, the evaluation of storage systems can require the ingestion of evolving knowledge graphs of varying sizes, but real-world datasets only exist in fixed sizes.

To solve this problem, we focus on the generation of evolving knowledge graphs assuming that we have population distributions as input. For this, we started from the research question: “Can population distribution data be used to generate realistic synthetic public transport networks and scheduling?” Concretely, we introduce a mimicking algorithm for generating realistic synthetic evolving knowledge graphs with configurable sizes and properties. The algorithm is based on established concepts from the domain of public transport network design, and takes population distributions as input to generate realistic transport networks. The algorithm has been implemented in a system called PoDiGG, and has been evaluated to measure its performance and level of realism.

Ruben Taelman, Pieter Colpaert, Erik Mannens, and Ruben Verborgh. 2019. Generating Public Transport Data based on Population Distributions for RDF Benchmarking. Semantic Web Journal 10, 2 (January 2019), 305–328.

Abstract

When benchmarking RDF data management systems such as public transport route planners, system evaluation needs to happen under various realistic circumstances, which requires a wide range of datasets with different properties. Real-world datasets are almost ideal, as they offer these realistic circumstances, but they are often hard to obtain and inflexible for testing. For these reasons, synthetic dataset generators are typically preferred over real-world datasets due to their intrinsic flexibility. Unfortunately, many synthetic datasets that are generated within benchmarks are insufficiently realistic, raising questions about the generalizability of benchmark results to real-world scenarios. In order to benchmark geospatial and temporal RDF data management systems, such as route planners, with sufficient external validity and depth, we designed PoDiGG, a highly configurable generation algorithm for synthetic public transport datasets with realistic geospatial and temporal characteristics comparable to those of their real-world variants. The algorithm is inspired by real-world public transit network design and scheduling methodologies. This article discusses the design and implementation of PoDiGG and validates the properties of its generated datasets. Our findings show that the generator achieves a sufficient level of realism, based on the existing coherence metric and new metrics we introduce specifically for the public transport domain. Thereby, PoDiGG provides a flexible foundation for benchmarking RDF data management systems with geospatial and temporal data.

Introduction

The Resource Description Framework (RDF) [3] and Linked Data [5] technologies enable distributed use and management of semantic data models. Datasets with an interoperable domain model can be stored and queried by different data owners in different ways. In order to discover the strengths and weaknesses of different storage and querying possibilities, data-driven benchmarks with different sizes of datasets and varying characteristics can be used.

Regardless of whether existing data-driven benchmarks use real or synthetic datasets, the external validity of their results can be too limited, which makes a generalization to other datasets difficult. Real datasets, on the one hand, are often scarcely available for testing, and only cover very specific scenarios, such that not all aspects of systems can be assessed. Synthetic datasets, on the other hand, are typically generated by mimicking algorithms [6, 7, 8, 9], which are not always sufficiently realistic [10]. Features that are relevant for real-world datasets may not be tested. As such, conclusions drawn from existing benchmarks do not always apply to the envisioned real-world scenarios. One way to get the best of both worlds is to design mimicking algorithms that generate realistic synthetic datasets.

The public transport domain provides data with both geospatial and temporal properties, which makes this an especially interesting source of data for benchmarking. Its representation as Linked Data is valuable because 1) many entities, such as stops, routes and trips, are shared across different existing datasets on the Web, 2) these entities can be distributed over different datasets, and 3) they benefit from interlinking, which improves discoverability. Synthetic public transport datasets are particularly important and needed in cases where public transport route planning algorithms are evaluated. The Linked Connections framework [11] and Connection Scan Algorithm [12] are examples of such public transport route planning systems. Because of the limited availability of real-world datasets with desired properties, these systems were evaluated with only a very low number of datasets, respectively one and three datasets. A synthetic public transport dataset generator would make it easier for researchers to include a higher number of realistic datasets with various properties in their evaluations, which would be beneficial to the discovery of new insights from the evaluations. Network size, network sparsity and temporal range are examples of such properties, and different combinations of them may not always be available in real datasets, which motivates the need for generating synthetic, but realistic datasets with these properties.

Not only are public transport datasets useful for benchmarking route planning systems, they are also highly useful for benchmarking geospatial [13, 14] and temporal [15, 16] RDF systems due to the intrinsic geospatial and temporal properties of public transport datasets. While synthetic dataset generators already exist in the geospatial and temporal domain [17, 18], no systems exist yet that focus on realism, and specifically look into the generation of public transport datasets. As such, the main topic that we address in this work is the need for realistic public transport datasets with geospatial and temporal characteristics, so that they can be used to benchmark RDF data management and route planning systems. More specifically, we introduce a mimicking algorithm for generating realistic public transport data, which is the main contribution of this work.

We observed a significant correlation between transport networks and the population distributions of their geographical areas, which is why population distributions are the driving factor within our algorithm. The cause of this correlation is obvious, considering transport networks are frequently used to transport people, but other – possibly independent – factors exist that influence transport networks as well, like certain points of interest such as tourist attractions and shopping areas. Our algorithm is subdivided into five sequential steps, inspired by existing methodologies from the domains of public transit planning [19] as a means to improve the realism of the algorithm’s output data. These steps include the creation of a geospatial region, the placement of stops, edges and routes, and the scheduling of trips. We provide an implementation of this algorithm, with different parameters to configure the algorithm. Finally, we confirm the realism of datasets that are generated by this algorithm using the existing generic structuredness measure [10] and new measures that we introduce, which are specific to the public transport domain. The notable difference of this work compared to other synthetic dataset generators is that our generation algorithm specializes in generating public transit networks, while other generators either focus on other domains, or aim to be more general-purpose. Furthermore, our algorithm is based on population distributions and existing methodologies from public transit network design.

In the next section, we introduce the related work on dataset generation, followed by the background on public transit network design, and transit feed formats in Section 2.3. In Section 2.4, we introduce the main research question and hypothesis of this work. Next, our algorithm is presented in Section 2.5, followed by its implementation in Section 2.6. In Section 2.7, we present the evaluation of our implementation, followed by a discussion and conclusion in Section 2.8 and Section 2.9.

Public Transit Background

In this section, we present background on public transit planning that is essential to this work. We discuss existing public transit network planning methodologies and formats for exchanging transit feeds.

Public Transit Planning

The domain of public transit planning entails the design of public transit networks, rostering of crews, and all the required steps in between. The goal is to maximize the quality of service for passengers while minimizing the costs for the operator. Given a public demand and a topological area, this planning process aims to obtain routes, timetables, and vehicle and crew assignments. A survey of 69 existing public transit planning approaches shows that these processes are typically subdivided into five sequential steps [19]:

- route design, the placement of transit routes over an existing network.

- frequencies setting, the temporal instantiation of routes based on the available vehicles and estimated demand.

- timetabling, the calculation of arrival and departure times at each stop based on estimated demand.

- vehicle scheduling, vehicle assignment to trips.

- crew scheduling and rostering, the assignment of drivers and additional crew to trips.

In this paper, we only consider the first three steps for our mimicking algorithm, which leads to all the required information that is of importance to passengers in a public transit schedule. We present the three steps from this survey in more detail hereafter.

The first step, route design, requires the topology of an area and public demand as input. This topology describes the network in an area, which contains possible stops and edges between these stops. Public demand is typically represented as origin-destination (OD) matrices, which contain the number of passengers willing to go from origin stops to destination stops. Given this input, routes are designed based on the following objectives [19]:

- area coverage: The percentage of public demand that can be served.

- route and trip directness: A metric that indicates how much the actual trips from passengers deviate from the shortest path.

- demand satisfaction: How many stops are close enough to all origin and destination points.

- total route length: The total distance of all routes, which is typically minimized by operators.

- operator-specific objectives: Any other constraints the operator has, for example the shape of the network.

- historical background: Existing routes may influence the new design.

The next step is the setting of frequencies, which is based on the routes from the previous step, public demand and vehicle availability. The main objectives in this step are based on the following measures [19]:

- demand satisfaction: How many stops are serviced frequently enough to avoid overcrowding and long waiting times.

- number of line runs: How many times each line is serviced – a trade-off between the operator’s aim for minimization and the public demand for maximization.

- waiting time bounds: Regulation may put restrictions on minimum and maximum waiting times between line runs.

- historical background: Existing frequencies may influence the new design.

The last important step for this work is timetabling, which takes the output from the previous steps as input, together with the public demand. The objectives for this step are the following:

- demand satisfaction: Total travel time for passengers should be minimized.

- transfer coordination: Transfers from one line to another at a certain stop should be taken into account during stop waiting times, including how many passengers are expected to transfer.

- fleet size: The total amount of available vehicles and their usage will influence the timetabling possibilities.

- historical background: Existing timetables may influence the new design.

Transit Feed Formats

The de-facto standard for public transport time schedules is the General Transit Feed Specification (GTFS). GTFS is an exchange format for transit feeds, using a series of CSV files contained in a zip file. The specification uses the following terminology to define the rules for a public transit system:

- Stop is a geospatial location where vehicles stop and passengers can get on or off, such as platform 3 in the train station of Brussels.

- Stop time indicates a scheduled arrival and departure time at a certain stop.

- Route is a time-independent collection of stops, describing the sequence of stops a certain vehicle follows in a certain public transit line. For example the train route from Brussels to Ghent.

- Trip is a collection of stops with their respective stop times, such as the route from Brussels to Ghent at a certain time.

The zip file is put online by a public transit operator, to be downloaded by route planning [24] software. Two models are commonly used to then extract these rules into a graph [25]. In a time-expanded model, a large graph is modeled with arrivals and departures as nodes and edges connect departures and arrivals together. The weights on these edges are constant. In a time-dependent model, a smaller graph is modeled in which vertices are physical stops and edges are transit connections between them. The weights on these edges change as a function of time. In both models, Dijkstra and Dijkstra-based algorithms can be used to calculate routes.

In contrast to these two models, the Connection Scan Algorithm [12] takes an ordered array representation of connections as input. A connection consists of a departure time at a stop and an arrival time at the next stop. These connections can be given an IRI and described in RDF using the Linked Connections [11] ontology. For this base algorithm and its derivatives, a connection object is the smallest building block of a transit schedule.
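
To illustrate why an ordered array of connections is a convenient input, the following minimal sketch performs an earliest-arrival scan in the spirit of the Connection Scan Algorithm. The `Connection` tuple, the stop names and the integer timestamps are illustrative assumptions rather than the Linked Connections vocabulary, and transfer times and footpaths are ignored.

```python
from typing import NamedTuple


class Connection(NamedTuple):
    dep_stop: str
    arr_stop: str
    dep_time: int  # assumed unit: minutes since midnight
    arr_time: int


def earliest_arrival(connections, source, target, start_time):
    """Scan the connections once in departure order and return the earliest
    arrival time at target, or None if target cannot be reached."""
    best = {source: start_time}  # earliest known arrival time per stop
    for c in sorted(connections, key=lambda c: c.dep_time):
        # A connection is usable if its departure stop is reached in time.
        if best.get(c.dep_stop, float("inf")) <= c.dep_time:
            if c.arr_time < best.get(c.arr_stop, float("inf")):
                best[c.arr_stop] = c.arr_time
    return best.get(target)


connections = [
    Connection("Brussels", "Aalst", 480, 500),
    Connection("Aalst", "Ghent", 505, 525),
    Connection("Brussels", "Ghent", 490, 530),
]
print(earliest_arrival(connections, "Brussels", "Ghent", 475))
```

A single pass suffices because every connection departs no earlier than the connections scanned before it.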

In our work, generated public transport networks and time schedules can be serialized to both the GTFS format, and RDF datasets using the Linked Connections ontology.

Research Question

In order to generate public transport networks and schedules, we start from the hypothesis that both are correlated with the population distribution within the same area. More populated areas are expected to have more nearby and more frequent access to public transport, corresponding to the recurring demand satisfaction objective in public transit planning [19]. When we calculate the correlation between the distribution of stops in an area and its population distribution, we discover a positive correlation of 0.439 for Belgium and 0.459 for the Netherlands (p-values in both cases < 0.00001), thereby validating our hypothesis with a confidence of 99%. Because of the continuous population variable and the binary variable indicating whether or not there is a stop, the correlation is calculated using the point-biserial correlation coefficient [26]. For the calculation of these correlations, we ignored the population value outliers. Following this conclusion, our mimicking algorithm will use such population distributions as input, and derive public transport networks and trip instances.
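
As a small illustration of the measure used here, the sketch below computes a point-biserial correlation coefficient between a continuous variable (population per cell) and a binary variable (whether a cell contains a stop). The function name and the toy input are assumptions for illustration only; the sketch does not reproduce the outlier filtering or the significance test behind the reported values.

```python
import math


def point_biserial(values, flags):
    """Point-biserial correlation between a continuous variable (values)
    and a binary variable (flags, where truthy means group 1)."""
    n = len(values)
    group1 = [v for v, f in zip(values, flags) if f]
    group0 = [v for v, f in zip(values, flags) if not f]
    if not group1 or not group0:
        raise ValueError("both groups must be non-empty")
    mean_all = sum(values) / n
    # Population standard deviation over all observations.
    std = math.sqrt(sum((v - mean_all) ** 2 for v in values) / n)
    if std == 0:
        raise ValueError("values must not be constant")
    mean1 = sum(group1) / len(group1)
    mean0 = sum(group0) / len(group0)
    return (mean1 - mean0) / std * math.sqrt(len(group1) * len(group0) / n ** 2)


# Toy example: population per cell and whether the cell contains a stop.
population = [10, 200, 30, 400, 20, 350]
has_stop = [0, 1, 0, 1, 0, 1]
print(point_biserial(population, has_stop))
```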

The main objective of a mimicking algorithm is to create realistic data, so that it can be used by benchmarks to evaluate systems under realistic circumstances. We will measure dataset realism at a high level by comparing the levels of structuredness of real-world datasets and their synthetic variants using the coherence metric introduced by Duan et al. [10]. Furthermore, we will measure the realism of different characteristics within public transport datasets, such as the location of stops, density of the network of stops, length of routes or the frequency of connections. We will quantify these aspects by measuring the distance of each aspect between real and synthetic datasets. These dataset characteristics will be linked with potential evaluation metrics within RDF data management systems, and tasks to evaluate them. This generic coherence metric together with domain-specific metrics will provide a way to evaluate dataset realism.

Based on this, we introduce the following research question for this work:

Can population distribution data be used to generate realistic synthetic public transport networks and scheduling?

We provide an answer to this question by first introducing an algorithm for generating public transport networks and their scheduling based on population distributions in Section 2.5. After that, we validate the realism of datasets that were generated using an implementation of this algorithm in Section 2.7.

Method

In order to formulate an answer to our research question, we designed a mimicking algorithm that generates realistic synthetic public transit feeds. We based it on techniques from the domains of public transit planning, spatiotemporal and RDF dataset generation. We reuse the route design, frequencies setting and timetabling steps from the domain of public transit planning, but prepend these with a network generation phase.

Fig. 3 shows the model of the generated public transit feeds, with connections being the primary data element.

Fig. 3: The resources (rectangle), literals (dashed rectangle) and properties (arrows) used to model the generated public transport data. Node and text colors indicate vocabularies.

We consider different properties in this model based on the independent, intra-record or inter-record dependency rules [22], as discussed in Section 2.2. The arrival time in a connection can be represented as a fully intra-record dependency, because it depends on the time it departed and the stops it goes between. The departure time in a connection is both an intra-record and inter-record dependency, because it depends on the stop at which it departs, but also on the arrival time of the connection before it in the trip. Furthermore, the delay value can be seen as an inter-record dependency, because it is influenced by the delay value of the previous connection in the trip. Finally, the geospatial location of a stop depends on the location of its parent station, so this is also an inter-record dependency. All other unmentioned properties are independent.

In order to generate data based on these dependency rules, our algorithm is subdivided in five steps:

- Region: Creation of a two-dimensional area of cells annotated with population density information.

- Stops: Placement of stops in the area.

- Edges: Connecting stops using edges.

- Routes: Generation of routes between stops by combining edges.

- Trips: Scheduling of timely trips over routes by instantiating connections.

These steps are not fully sequential, since stop generation is partially executed before and after edge generation. The first three steps are required to generate a network, step 4 corresponds to the route design step in public transit planning and step 5 corresponds to both the frequencies setting and timetabling. These steps are explained in the following subsections.

Region

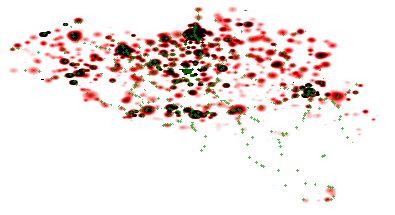

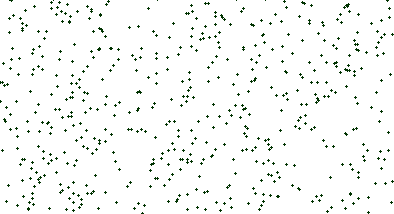

In order to create networks, we sample geographic regions in which such networks exist as two-dimensional matrices. The resolution is defined as a configurable number of cells per square of one latitude by one longitude. Network edges are then represented as links between these cells. Because our algorithm is population distribution-based, each cell contains a population density. These values can either be based on real population information from countries, or they can be generated based on certain statistical distributions. For the remainder of this paper, we will reuse the population distribution from Belgium as a running example, as illustrated in Fig. 4.

Fig. 4: Heatmap of the population distribution in Belgium, which is illustrated for each cell as a scale going from white (low), to red (medium) and black (high). The actual placement of train stops is indicated by green points.

Stops

Stop generation is divided into two phases. First, stops are placed based on population values. Then, after the edge generation step has been executed, a second phase creates additional stops based on the generated edges.

Population-based For the initial placement of stops, our algorithm only takes a population distribution as input. The algorithm iteratively selects random cells in the two-dimensional area, and tags those cells as stops. To make it region-based [21], the selection uses a weighted Zipf-like distribution, where cells with high population values have a higher chance of being picked than cells with lower values. The shape of this Zipf curve can be scaled to allow for different stop distributions to be configured. Furthermore, a minimum distance between stops can be configured, to avoid situations where all stops are placed in highly populated areas.
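
The population-based placement can be sketched as follows. This is a simplified illustration and not the PoDiGG implementation: the rank-based weighting `1 / rank ** zipf_exponent`, the parameter names and the attempt limit are assumptions chosen to mimic a scalable Zipf-like curve with a minimum inter-stop distance.

```python
import math
import random


def place_stops(population, count, zipf_exponent=1.0, min_distance=1.5, seed=1):
    """Pick up to `count` stop cells from `population`, a dict that maps
    (x, y) cells to population values."""
    rng = random.Random(seed)  # fixed seed for deterministic output
    # Rank cells by population; rank 1 is the most populated cell.
    cells = sorted(population, key=population.get, reverse=True)
    weights = [1.0 / rank ** zipf_exponent for rank in range(1, len(cells) + 1)]
    stops = []
    attempts = 0
    while len(stops) < count and attempts < 100 * count:
        attempts += 1
        cell = rng.choices(cells, weights=weights, k=1)[0]
        # Reject cells that are too close to an already placed stop.
        if all(math.dist(cell, s) >= min_distance for s in stops):
            stops.append(cell)
    return stops


grid = {(x, y): (x + 1) * (y + 1) for x in range(5) for y in range(5)}
print(place_stops(grid, 4))
```

A larger `zipf_exponent` concentrates stops in the most populated cells, while a larger `min_distance` spreads them out.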

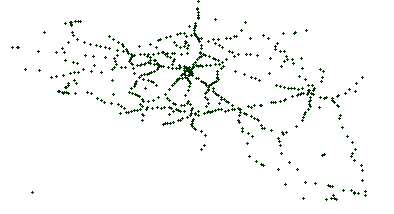

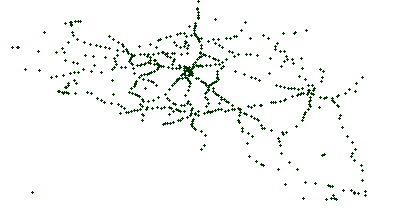

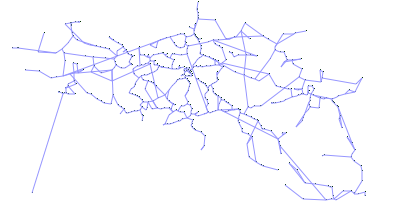

Edge-based Another stop generation phase exists after the edge generation because real transit networks typically show line artifacts for stop placement. Subfig. 5.1 shows the actual train stops in Belgium, which clearly show line structures. The stop placement that results from the first generation phase can be seen in Subfig. 5.2, which does not show these line structures. After the second stop generation phase, these line structures become more apparent, as can be seen in Subfig. 5.3.

Subfig. 5.1: Real stops with line structures.

Subfig. 5.2: Synthetic stops after the first stop generation phase without line structures.

Subfig. 5.3: Synthetic stops after the second stop generation phase with line structures.

Fig. 5: Placement of train stops in Belgium, each dot represents one stop.

In this second stop generation phase, edges are modified so that sufficiently populated areas will be included in paths formed by edges, as illustrated by Fig. 6. Random edges will iteratively be selected, weighted by the edge length measured as Euclidean distance. (The Euclidean distance based on geographical coordinates is always used to calculate distances in this work.) On each edge, a random cell is selected, weighted by the population value in the cell. Next, a weighted random point in a certain area around this point is selected. This selected point is marked as a stop, the original edge is removed and two new edges are added, marking the path between the two original edge nodes and the newly selected node.

Subfig. 6.1: Selecting a weighted random point on the edge.

Subfig. 6.2: Defining an area around the selected point.

Subfig. 6.3: Choosing a random point within the area, weighted by population value.

Subfig. 6.4: Modifying edges so that the path includes this new point.

Fig. 6: Illustration of the second phase of stop generation where edges are modified to include sufficiently populated areas in paths.
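
The four sub-steps of Fig. 6 can be sketched for a single iteration as follows. This is an illustrative simplification: the helper `cells_on_edge`, the square search area and the `radius` parameter are assumptions, and edges are assumed to connect distinct cells.

```python
import math
import random


def cells_on_edge(a, b):
    """Grid cells visited when walking the straight line from a to b."""
    steps = max(abs(b[0] - a[0]), abs(b[1] - a[1]), 1)
    return [(round(a[0] + (b[0] - a[0]) * i / steps),
             round(a[1] + (b[1] - a[1]) * i / steps)) for i in range(steps + 1)]


def split_random_edge(edges, population, radius=1, rng=random):
    """Replace one edge (a, b) by (a, p) and (p, b), where p is a populated
    cell near the edge. Returns p, or None if no candidate cell exists."""
    # Select an edge, weighted by its Euclidean length.
    a, b = rng.choices(edges, weights=[math.dist(*e) for e in edges], k=1)[0]
    on_edge = cells_on_edge(a, b)
    # The small constant keeps weights positive for unpopulated cells.
    centre = rng.choices(
        on_edge, weights=[population.get(c, 0) + 1e-9 for c in on_edge], k=1)[0]
    # Candidate cells in a square area around the selected point.
    area = [(centre[0] + dx, centre[1] + dy)
            for dx in range(-radius, radius + 1)
            for dy in range(-radius, radius + 1)]
    area = [c for c in area if c in population and c not in (a, b)]
    if not area:
        return None
    p = rng.choices(area, weights=[population[c] + 1e-9 for c in area], k=1)[0]
    edges.remove((a, b))
    edges.extend([(a, p), (p, b)])
    return p
```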

Edges

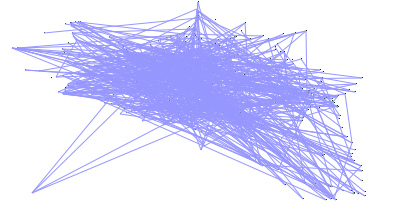

The next phase in public transit network generation connects stops that were generated in the previous phase with edges. In order to simulate real transit network structures, we split up this generation phase into three sequential steps. In the first step, clusters of nearby stops are formed, to lay the foundation for short-distance routes. Next, these local clusters are connected with each other, to be able to form long-distance routes. Finally, a cleanup step is in place to avoid abnormal edge structures in the network.

Short-distance The formation of clusters with nearby stations is done using agglomerative hierarchical clustering. Initially, each stop is part of a separate cluster, where each cluster always maintains its centroid. The clustering step will iteratively try to merge two clusters with their centroid distance below a certain threshold. This threshold will increase for each iteration, until a maximum value is reached. The maximum distance value indicates the maximum inter-stop distance for forming local clusters. When merging two clusters, an edge is added between the closest stations from the respective clusters. The center location of the new cluster is also recalculated before the next iteration.
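
A minimal sketch of this clustering step is given below. It is not the PoDiGG implementation: the linear threshold schedule controlled by `step` and the exhaustive pairwise search are assumptions made for brevity.

```python
import math
from itertools import combinations


def centroid(cluster):
    return (sum(x for x, _ in cluster) / len(cluster),
            sum(y for _, y in cluster) / len(cluster))


def cluster_stops(stops, max_distance, step):
    """Return (clusters, edges) after merging clusters whose centroids lie
    closer together than a threshold that grows up to max_distance."""
    clusters = [[s] for s in stops]  # initially one cluster per stop
    edges = []
    threshold = step
    while threshold <= max_distance:
        merged = True
        while merged:
            merged = False
            for i, j in combinations(range(len(clusters)), 2):
                if math.dist(centroid(clusters[i]), centroid(clusters[j])) < threshold:
                    # Link the two closest stations of the merged clusters.
                    a, b = min(((p, q) for p in clusters[i] for q in clusters[j]),
                               key=lambda pq: math.dist(*pq))
                    edges.append((a, b))
                    clusters[i] = clusters[i] + clusters[j]
                    del clusters[j]
                    merged = True
                    break  # centroids changed, so restart the search
        threshold += step
    return clusters, edges


stops = [(0, 0), (1, 0), (0, 1), (10, 10), (11, 10)]
clusters, edges = cluster_stops(stops, max_distance=2, step=1)
print(clusters, edges)
```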

Long-distance At this stage, we have several clusters of nearby stops. Because all stops need to be reachable from all stops, these separate clusters also need to be connected. This problem is related to the domain of route planning over public transit networks, in which networks can be decomposed into smaller clusters of nearby stations to improve the efficiency of route planning. Each cluster contains one or more border stations [27], which are the only points through which routes can be formed between different clusters. We reuse this concept of border stations, by iteratively picking a random cluster, identifying its closest cluster based on the minimal possible stop distance, and connecting their border stations using a new edge. After that, the two clusters are merged. The iteration will halt when all clusters are merged and there is only one connected graph.
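
The long-distance step can be sketched as follows, under the simplifying assumption that the border stations of two clusters are their closest pair of stops. The function name and this choice of border stations are illustrative and not taken from the original implementation.

```python
import math
import random


def connect_clusters(clusters, rng=random):
    """Merge clusters pairwise through their closest stops until a single
    cluster remains. Returns the edges that were added."""
    clusters = [list(c) for c in clusters]
    edges = []
    while len(clusters) > 1:
        # Pick a random cluster and remove it from the pool.
        current = clusters.pop(rng.randrange(len(clusters)))

        def closest_pair(other):
            # Closest pair of stops between `current` and `other`.
            return min(((a, b) for a in current for b in other),
                       key=lambda ab: math.dist(*ab))

        nearest = min(clusters, key=lambda other: math.dist(*closest_pair(other)))
        edges.append(closest_pair(nearest))
        nearest.extend(current)  # merge the two clusters
    return edges


example = [[(0, 0), (1, 0)], [(5, 0)], [(5, 5), (6, 5)]]
print(connect_clusters(example, rng=random.Random(7)))
```

Each iteration reduces the number of clusters by one, so k clusters are connected with exactly k - 1 new edges.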

Cleanup The final cleanup step reduces the number of stops that are connected by only one edge. In real train networks, the majority of stations are connected with more than one other station. The two earlier generation steps, however, generate a significant number of loose stops, which are connected to only a single other stop by a direct edge. In this step, these loose stops are identified, and an attempt is made to connect them to other nearby stops, as shown in Algorithm 1. For each loose stop, this is done by first identifying the direction of the single edge of the loose stop on line 18. This direction is scaled by the radius in which to look for stops, and defines the step size for the loop that starts on line 20. This loop starts from the loose stop and iteratively moves the search position in the defined direction, until it finds a random stop in the radius, or the search distance exceeds the average distance between the stops in the neighbourhood of this loose stop. This random stop from line 22 can be determined by finding all stations that have a distance to the search point that is below the radius, and picking a random stop from this collection. If such a stop is found, an edge is added from our loose stop to this stop.

    FUNCTION RemoveLooseStops(S, E, N, O, r)
      INPUT:
        Set of stops S
        Set of edges E between the stops from S
        Maximum number N of closest stations to consider
        Maximum average distance O around a stop to be considered
          a loose station
        Radius r in which to look for stops.
      FOREACH s in S with degree of 1 w.r.t. E DO
        sx = x coordinate of s
        sy = y coordinate of s
        C = N closest stations to s in S excluding s
        c = closest station to s in S excluding s
        cx = x coordinate of c
        cy = y coordinate of c
        a = average distance between each pair of stops in C
        IF a <= O and C not empty THEN
          dx = (sx - cx) * r
          dy = (sy - cy) * r
          ox = sx; oy = sy
          WHILE distance between o and s < a DO
            ox += dx; oy += dy
            s' = random station around o with radius a * r
            IF s' exists
              add edge between s and s' to E and continue
                next for-loop iteration

Algorithm 1: Reduce the number of loose stops by adding additional edges.

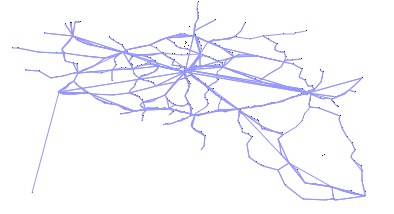

Fig. 7 shows an example of these three steps. After this phase, a network with stops and edges is available, and the actual transit planning can commence.

Subfig. 7.1: Formation of local clusters.

Subfig. 7.2: Connecting clusters through border stations.

Subfig. 7.3: Cleanup of loose stops.

Fig. 7: Example of the different steps in the edges generation algorithm.

Generator Objectives The main guaranteed objective of the edge generator is that the stops form a single connected transit network graph. This is to ensure that all stops in the network can be reached from any other stop using at least one path through the network.

Routes

Given a network of stops and edges, this phase generates routes over the network. This is done by creating short and long distance routes in two sequential steps.

Short-distance The goal of the first step is to create short routes in which vehicles serve each passed stop. This step makes sure that all edges are used in at least one route, which ensures that each stop can be reached from each other stop, possibly with one or more transfers to another line. The algorithm does this by first determining a subset of the largest stops in the network, based on the population value. The shortest path from each large stop to each other large stop through the network is determined. If this shortest path is shorter than a predetermined value in terms of the number of edges, then this path is stored as a route, in which all passed stops are considered as actual stops in the route. For each edge that has not yet been passed after this, a route is created by iteratively adding connected unpassed edges to the route until an edge is found that has already been passed.

Long-distance In the next step, longer routes are created, where the transport vehicle does not necessarily halt at each passed stop. This is done by iteratively picking two stops from the list of largest stops using the network-based method [21], with each stop having an equal chance of being selected. A heuristic shortest path algorithm is used to determine a route between these stops. This algorithm searches for edges in the geographical direction of the target stop, which limits the complexity of finding long paths through potentially large networks. A random number of the largest stops on the path are selected, where this number lies between a preconfigured minimum and maximum route length. The iteration ends when a predetermined number of routes has been generated.

Generator Objectives This algorithm takes into account the objectives of route design [19], as discussed in Section 2.2. More specifically, by first focusing on the largest stops, a minimal level of area coverage and demand satisfaction is achieved, because the largest stops correspond to highly populated areas, which therefore satisfies at least a large part of the population. By determining the shortest path between these largest stops, the route and trip directness between these stops is optimal. Finally, by not instantiating all possible routes over the network, the total route length is limited to a reasonable level.

Trips

A time-agnostic transit network with routes has been generated in the previous steps. In this final phase, we temporally instantiate routes by first determining starting times for trips, after which the following stop times can be calculated based on route distances. Instead of generating explicit timetables, as is done in typical transit scheduling methodologies, we create fictional rides of vehicles. In order to achieve realistic trip times, we approximate real trip time distributions, with the possibility to encounter delays.

As mentioned before in Section 2.2, each consecutive pair of start and stop time in a trip over an edge corresponds to a connection. A connection can therefore be represented as a pair of timestamps, a link to the edge representing the departure and arrival stop, a link to the trip it is part of, and its index within this trip.

Trip Starting Times The trips generator iteratively creates new connections until a predefined number is reached. For each connection, a random route is selected with a larger chance of picking a long route. Next, a random start time of the connection is determined. This is done by first picking a random day within a certain range. After that, a random hour of the day is determined using a preconfigured distribution. This distribution is derived from the public logs of iRail, a route planning API in Belgium [28]. A separate hourly distribution is used for weekdays and weekends, which is chosen depending on the random day that was determined.
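
The selection of a starting time can be sketched as follows. The hourly weights below are made-up placeholders and not the distribution derived from the iRail logs; the uniformly random minute is likewise an assumption, since only the day and hour selection are described above.

```python
import random
from datetime import datetime, timedelta

# Hypothetical hourly weights (index = hour of day), NOT the iRail-derived values.
WEEKDAY_HOURS = [1, 1, 1, 1, 2, 4, 8, 12, 12, 8, 6, 6,
                 6, 6, 6, 8, 12, 12, 8, 6, 4, 3, 2, 1]
WEEKEND_HOURS = [1, 1, 1, 1, 1, 2, 3, 4, 6, 8, 8, 8,
                 8, 8, 8, 8, 8, 8, 6, 5, 4, 3, 2, 1]


def random_start_time(first_day, day_range, rng=random):
    """Pick a random day in [first_day, first_day + day_range) and an hour
    drawn from the weekday or weekend distribution for that day."""
    day = first_day + timedelta(days=rng.randrange(day_range))
    # Monday is 0, so 5 and 6 are Saturday and Sunday.
    weights = WEEKEND_HOURS if day.weekday() >= 5 else WEEKDAY_HOURS
    hour = rng.choices(range(24), weights=weights, k=1)[0]
    # Assumption: minutes are uniformly random within the chosen hour.
    return day.replace(hour=hour, minute=rng.randrange(60), second=0, microsecond=0)


print(random_start_time(datetime(2017, 1, 2), 7, random.Random(0)))
```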

Stop Times Once the route and the starting time have been determined, different stop times across the trip can be calculated. For this, we take into account the following factors:

- Maximum vehicle speed, preconfigured constant.

- Vehicle acceleration, preconfigured constant.

- Connection distance, Euclidean distance between stops in network.

- Stop size, derived from population value.

For each connection in the trip, the time it takes for a vehicle to move between the two stops over a certain distance is calculated using the formula in Equation 3. Equation 1 calculates the required time to reach maximum speed and Equation 2 calculates the required distance to reach maximum speed. This formula simulates the vehicle speeding up until its maximum speed, and slowing down again until it reaches its destination. When the distance is too short, the vehicle will not reach its maximum speed, and just speeds up as long as possible until it has to slow down again to stop in time.

Equation 1: Time to reach maximum speed.

Equation 2: Distance to reach maximum speed.

Equation 3: Duration for a vehicle to move between two stops.
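
The behaviour described for these three equations can be sketched with textbook constant-acceleration kinematics. This is an assumption-laden illustration: the function and parameter names are hypothetical, symmetric acceleration and deceleration are assumed, and the exact form of Equations 1 to 3 may differ from what is shown here.

```python
import math


def time_to_max_speed(max_speed, acceleration):
    """Time needed to accelerate from standstill to max_speed."""
    return max_speed / acceleration


def distance_to_max_speed(max_speed, acceleration):
    """Distance covered while accelerating from standstill to max_speed."""
    t = time_to_max_speed(max_speed, acceleration)
    return 0.5 * acceleration * t ** 2


def connection_duration(distance, max_speed, acceleration):
    """Travel time between two stops: speed up, cruise, slow down."""
    t_max = time_to_max_speed(max_speed, acceleration)
    d_max = distance_to_max_speed(max_speed, acceleration)
    if distance >= 2 * d_max:
        # Maximum speed is reached; cruise over the remaining distance.
        return 2 * t_max + (distance - 2 * d_max) / max_speed
    # Too short to reach maximum speed: accelerate over the first half
    # of the distance and decelerate over the second half.
    return 2 * math.sqrt(distance / acceleration)


print(connection_duration(distance=100, max_speed=10, acceleration=2))
```

Both branches agree when the distance equals exactly twice the acceleration distance, so the duration is continuous in the distance.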

Not only the connection duration, but also the waiting times of the vehicle at each stop are important for determining the stop times. These are calculated as a constant minimum waiting time together with a waiting time that increases for larger stop sizes; this increase is determined by a predefined growth factor.

Delays Finally, each connection in the trip will have a certain chance to encounter a delay. When a delay is applicable, a delay value is randomly chosen within a certain range. In addition, a cause of the delay is selected from a preconfigured list. These causes are based on the Traffic Element Events from the Transport Disruption ontology, which contains a number of events that are not planned by the network operator, such as strikes, bad weather or animal collisions. Different types of delays can have a different impact factor on the delay value. For instance, simple delays caused by rush hour would have a lower impact factor than a major train defect. Delays are carried over to the next connections in the trip, each of which again has a chance of encountering additional delay. Furthermore, these delay values can also be reduced by a certain predetermined factor when carried over to the next connection, which simulates the attempt to reduce delays by letting vehicles drive faster.
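
A minimal sketch of this delay propagation is shown below. The impact factors, the uniform delay range and the parameter names (`chance`, `max_delay_ms`, `carry_factor`) are assumptions for illustration; only the cause identifiers are taken from the sample in Listing 2.

```python
import random

# Hypothetical impact factors per delay cause.
CAUSES = {"td:RepairWork": 1.0, "td:TreeWork": 0.5, "td:BrokenTrain": 2.0}


def propagate_delays(n_connections, chance, max_delay_ms, carry_factor, rng=random):
    """Return one (delay_ms, cause) pair per connection of a single trip.

    carry_factor is the fraction of a delay that remains when it is carried
    over to the next connection (1.0 means no recovery)."""
    result = []
    carried = 0.0
    for _ in range(n_connections):
        delay, cause = carried * carry_factor, None
        if rng.random() < chance:
            # A new delay occurs and is scaled by the impact of its cause.
            cause = rng.choice(sorted(CAUSES))
            delay += rng.uniform(0, max_delay_ms) * CAUSES[cause]
        result.append((delay, cause))
        carried = delay
    return result


print(propagate_delays(5, chance=0.3, max_delay_ms=600000, carry_factor=0.8,
                       rng=random.Random(42)))
```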

Generator Objectives For trip generation, we take into account several objectives from the setting of frequencies and timetabling from transit planning [19]. By instantiating more long-distance routes, we aim to increase demand satisfaction as much as possible, because these routes serve busy and populated areas, and the goal is to serve these more frequently. Furthermore, by taking into account realistic time distributions for trip instantiation, we also adhere to this objective. Secondly, by ensuring waiting times at each stop that are longer for larger stations, the transfer coordination objective is taken into account to some extent.

Implementation

In this section, we discuss the implementation details of PoDiGG, based on the generator algorithm introduced in Section 2.5. PoDiGG is split up into two parts: the main PoDiGG generator, which outputs GTFS data, and PoDiGG-LC, which depends on the main generator to output RDF data. Serialization in RDF using existing ontologies, such as the GTFS and Linked Connections ontologies, allows this inherently linked data to be used within RDF data management systems, where it can for instance be used for benchmarking purposes. Providing output in GTFS will allow this data to be used directly within all systems that are able to handle transit feeds, such as route planning systems. The two generator parts will be explained hereafter, followed by a section on how the generator can be configured using various parameters.

PoDiGG

The main requirement of our system is the ability to generate realistic public transport datasets using the mimicking algorithm that was introduced in Section 2.5. This means that given a population distribution of a certain region, the system must be able to design a network of routes, and determine timely trips over this network.

PoDiGG is implemented to achieve this goal. It is written in JavaScript using Node.js, and is available under an open license on GitHub. In order to make installation and usage more convenient, PoDiGG is available as a Node module on the NPM package manager and as a Docker image on Docker Hub to easily run on any platform. Every sub-generator that was explained in Section 2.5, is implemented as a separate module. This makes PoDiGG highly modifiable and composable, because different implementations of sub-generators can easily be added and removed. Furthermore, this flexible composition makes it possible to use real data instead of certain sub-generators. This can be useful for instance when a certain public transport network is already available, and only the trips and connections need to be generated.

We designed PoDiGG to be highly configurable to adjust the characteristics of the generated output across different levels, and to define a certain seed parameter for producing deterministic output.

All sub-generators store generated data in-memory, using list-based data structures directly corresponding to the GTFS format. This makes GTFS serialization a simple and efficient process. Table 1 shows the GTFS files that are generated by the different PoDiGG modules. This table does not contain references to the region and edges generator, because they are only used internally as prerequisites to the later steps. All required files are created to have a valid GTFS dataset. Next to that, the optional file for exceptional service dates is created. Furthermore, delays.txt is created, which is not part of the GTFS specification. It is an extension we provide in order to serialize delay information about each connection in a trip. These delays are represented in a CSV file containing columns for referring to a connection in a trip, and contains delay values in milliseconds and a certain reason per connection arrival and departure, as shown in Listing 2.

| File | Generator |
|---|---|
| **agency.txt** | Constant |
| **stops.txt** | Stops |
| **routes.txt** | Routes |
| **trips.txt** | Trips |
| **stop_times.txt** | Trips |
| **calendar.txt** | Trips |
| calendar_dates.txt | Trips |
| delays.txt | Trips |

Table 1: The GTFS files that are written by PoDiGG, with their corresponding sub-generators that are responsible for generating the required data. The files in bold refer to files that are required by the GTFS specification.

    trip_id,stop,delay_dep,delay_arr,delay_dep_reason,delay_arr_reason
    100_4,0,0,1405754,,td:RepairWork
    100_6,0,0,1751671,,td:BrokenTrain
    100_6,1,1751671,1553820,td:BrokenTrain,td:BrokenTrain
    100_7,0,2782295,0,td:TreeWork,

Listing 2: Sample of a delays.txt file in a GTFS dataset.
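To illustrate how a consumer could read this extension file, the following is a minimal sketch of a parser for the layout of Listing 2. It assumes a header row, comma separation and no quoted fields. The function name `parseDelays` and the field names of the resulting objects are hypothetical and are not part of PoDiGG.

```javascript
// Sketch: parse a delays.txt file into one object per row.
// Assumptions: header row present, comma-separated, no quoted fields.
function parseDelays(csvText) {
  const [headerLine, ...rows] = csvText.trim().split(/\r?\n/);
  const columns = headerLine.split(',').map((c) => c.trim());
  return rows.map((row) => {
    const cells = row.split(',').map((c) => c.trim());
    const record = {};
    columns.forEach((name, i) => { record[name] = cells[i] ?? ''; });
    return {
      tripId: record.trip_id,
      stop: Number(record.stop),
      delayDepartureMs: Number(record.delay_dep),
      delayArrivalMs: Number(record.delay_arr),
      // In the sample, an empty reason accompanies a delay of zero.
      departureReason: record.delay_dep_reason || null,
      arrivalReason: record.delay_arr_reason || null,
    };
  });
}

const sample = [
  'trip_id,stop,delay_dep,delay_arr,delay_dep_reason,delay_arr_reason',
  '100_4,0,0,1405754,,td:RepairWork',
  '100_6,1,1751671,1553820,td:BrokenTrain,td:BrokenTrain',
].join('\n');

const delays = parseDelays(sample);
console.log(delays[0].arrivalReason); // td:RepairWork
```

Because the delay columns are plain integers in milliseconds, they can be added directly to GTFS-derived timestamps that use the same unit.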

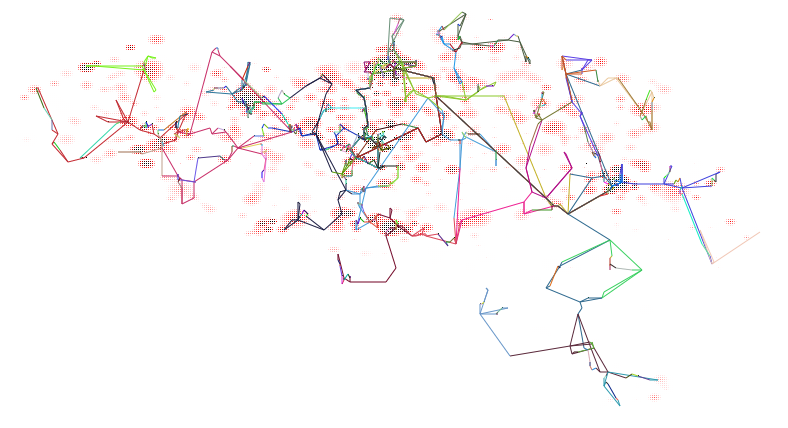

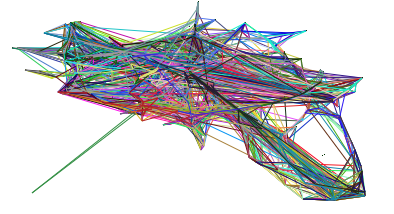

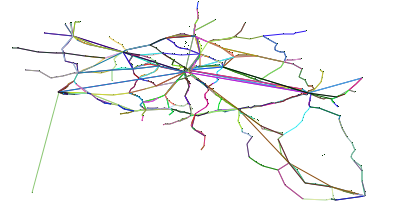

In order to easily observe the network structure in the generated datasets, PoDiGG will always produce a figure accompanying the GTFS dataset. Fig. 8 shows an example of such a visualization.

Fig. 8: Visualization of a generated public transport network based on Belgium’s population distribution. Each route has a different color, and dark route colors indicate more frequent trips over them than light colors. The population distribution is illustrated for each cell as a scale going from white (low), to red (medium) and black (high).

Because the generation of large datasets can take a long time depending on the used parameters, PoDiGG has a logging mechanism, which provides continuous feedback to the user about the current status and progress of the generator.

Finally, PoDiGG provides the option to derive realistic public transit queries over the generated network, aimed at testing the load of route planning systems. This is done by iteratively selecting two random stops weighted by their size, and choosing a random starting time based on the same time distribution as discussed in Subsection 2.5.5. The result is serialized to a JSON format that was introduced for benchmarking the Linked Connections route planner [11].
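The query derivation described above can be sketched as follows. This is an illustration under stated assumptions, not PoDiGG's implementation: the seeded generator (mulberry32) stands in for whatever pseudo-random source the tool uses, the helper names and the `from`/`to`/`departureTime` fields are hypothetical, the `start_stop_choice_power` parameter is ignored, and the output does not claim to follow the JSON format of [11].

```javascript
// Small seeded PRNG (mulberry32), so that the same seed yields the same queries.
function mulberry32(seed) {
  let a = seed >>> 0;
  return function () {
    a = (a + 0x6D2B79F5) >>> 0;
    let t = a;
    t = Math.imul(t ^ (t >>> 15), t | 1);
    t ^= t + Math.imul(t ^ (t >>> 7), t | 61);
    return ((t ^ (t >>> 14)) >>> 0) / 4294967296;
  };
}

// Pick one item with a probability proportional to its weight.
function pickWeighted(items, weights, random) {
  const total = weights.reduce((sum, w) => sum + w, 0);
  let threshold = random() * total;
  for (let i = 0; i < items.length; i++) {
    threshold -= weights[i];
    if (threshold < 0) return items[i];
  }
  return items[items.length - 1]; // guard against floating-point round-off
}

// Assumes at least two stops with a positive size.
function generateQueries(stops, hourlyDistribution, count, seed) {
  const random = mulberry32(seed);
  const sizes = stops.map((s) => s.size);
  const hours = hourlyDistribution.map((_, hour) => hour);
  const queries = [];
  while (queries.length < count) {
    const from = pickWeighted(stops, sizes, random);
    const to = pickWeighted(stops, sizes, random);
    if (from.id === to.id) continue; // a query needs two distinct stops
    const hour = pickWeighted(hours, hourlyDistribution, random);
    // Milliseconds since the start of the day, uniform within the chosen hour.
    const departureTime = Math.floor((hour + random()) * 3600000);
    queries.push({ from: from.id, to: to.id, departureTime });
  }
  return queries;
}

const exampleStops = [{ id: 'A', size: 30 }, { id: 'B', size: 5 }, { id: 'C', size: 1 }];
const hourly = new Array(24).fill(1);
hourly[8] = 5;  // morning peak
hourly[17] = 5; // evening peak
const queries = generateQueries(exampleStops, hourly, 3, 1);
console.log(JSON.stringify(queries, null, 2));
```

Weighting by stop size makes large stations appear more often as endpoints, and the hourly weights concentrate departure times around the peaks.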

PoDiGG-LC

PoDiGG-LC is an extension of PoDiGG that outputs data in Turtle/RDF using the ontologies shown in Fig. 3. It is also implemented in JavaScript using Node.js, and available under an open license on GitHub. PoDiGG-LC is also available as a Node module on NPM and as a Docker image on Docker Hub. For this, we extended the GTFS-LC tool, which converts GTFS datasets to RDF using the Linked Connections and GTFS ontologies. The original tool serializes a minimal subset of the GTFS data, aimed at Linked Connections route planning over connections. Our extension also serializes trip, station and route instances, with their relevant interlinking. Furthermore, our GTFS extension for representing delays is also supported, and is serialized using a new Linked Connections Delay ontology that we created.

Configuration

PoDiGG accepts a wide range of parameters that can be used to configure properties of the different sub-generators. Table 2 shows an overview of the parameters, grouped by each sub-generator. PoDiGG and PoDiGG-LC accept these parameters either in a JSON configuration file or via environment variables. Both PoDiGG and PoDiGG-LC produce deterministic output for identical sets of parameters, so that datasets can easily be reproduced given the configuration. The seed parameter can be used to introduce pseudo-randomness into the output.

| Sub-generator | Name | Default Value | Description |
|---|---|---|---|
| | seed | 1 | The random seed |
| Region | region_generator | isolated | Name of a region generator (isolated, noisy or region) |
| | lat_offset | 0 | Value to add to all generated latitudes |
| | lon_offset | 0 | Value to add to all generated longitudes |
| | cells_per_latlon | 100 | How many cells go in 1 latitude/longitude |
| Stops | stops | 600 | How many stops should be generated |
| | min_station_size | 0.01 | Minimum cell population value for a stop to form |
| | max_station_size | 30 | Maximum cell population value for a stop to form |
| | start_stop_choice_power | 4 | Power for selecting large population cells as stops |
| | min_interstop_distance | 1 | Minimum distance between stops in number of cells |
| | factor_stops_post_edges | 0.66 | Factor of stops to generate after edges |
| | edge_choice_power | 2 | Power for selecting longer edges to generate stops on |
| | stop_around_edge_choice_power | 4 | Power for selecting large population cells around edges |
| | stop_around_edge_radius | 2 | Radius in number of cells around an edge to select points |
| Edges | max_intracluster_distance | 100 | Maximum distance between stops in one cluster |
| | max_intracluster_distance_growthfactor | 0.1 | Power for clustering with more distant stops |
| | post_cluster_max_intracluster_distancefactor | 1.5 | Power for connecting a stop with multiple stops |
| | loosestations_neighbourcount | 3 | Neighbours around a loose station that should define its area |
| | loosestations_max_range_factor | 0.3 | Maximum loose station range relative to the total region size |
| | loosestations_max_iterations | 10 | Maximum iteration number to try to connect one loose station |
| | loosestations_search_radius_factor | 0.5 | Loose station neighbourhood size factor |
| Routes | routes | 1000 | The number of routes to generate |
| | largest_stations_fraction | 0.05 | The fraction of stops to form routes between |
| | penalize_station_size_area | 10 | The area in which stop sizes should be penalized |
| | max_route_length | 10 | Maximum number of edges for a route in the macro-step |
| | min_route_length | 4 | Minimum number of edges for a route in the macro-step |
| Connections | time_initial | 0 | The initial timestamp (ms) |
| | time_final | 24 * 3600000 | The final timestamp (ms) |
| | connections | 30000 | Number of connections to generate |
| | stop_wait_min | 60000 | Minimum waiting time per stop |
| | stop_wait_size_factor | 60000 | Waiting time to add multiplied by station size |
| | route_choice_power | 2 | Power for selecting longer routes for connections |
| | vehicle_max_speed | 160 | Maximum speed of a vehicle in km/h |
| | vehicle_speedup | 1000 | Vehicle speedup in km/h² |
| | hourly_weekday_distribution | ...¹ | Hourly connection chances for weekdays |
| | hourly_weekend_distribution | ...¹ | Hourly connection chances for weekend days |
| | delay_chance | 0 | Chance for a connection delay |
| | delay_max | 3600000 | Maximum delay |
| | delay_choice_power | 1 | Power for selecting larger delays |
| | delay_reasons | ...² | Default reasons and chances for delays |
| | delay_reduction_duration_fraction | 0.1 | Maximum part of connection duration to subtract for delays |
| Queryset | start_stop_choice_power | 4 | Power for selecting large starting stations |
| | query_count | 100 | The number of queries to generate |
| | time_initial | 0 | The initial timestamp |
| | time_final | 24 * 3600000 | The final timestamp |
| | max_time_before_departure | 3600000 | Maximum time before departure |
| | hourly_weekday_distribution | ...¹ | Chance for each hour to have a connection on a weekday |
| | hourly_weekend_distribution | ...¹ | Chance for each hour to have a connection on a weekend day |

Table 2: Configuration parameters for the different sub-generators. Time values are represented in milliseconds. ¹ Time distributions are based on public route planning logs [28]. ² Default delays are based on the Transport Disruption ontology.
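As an illustration of how a partial configuration relates to these defaults, the sketch below overlays a few user-supplied values on a subset of the defaults from Table 2. The helper `resolveConfig`, the flat layout of the JSON object and the merging strategy are assumptions made for this example. The text above only states that parameters can be passed in a JSON configuration file or via environment variables, not how they are loaded.

```javascript
// A few defaults copied from Table 2; this is not the complete parameter set.
const DEFAULTS = {
  seed: 1,
  region_generator: 'isolated',
  stops: 600,
  routes: 1000,
  connections: 30000,
  time_initial: 0,
  time_final: 24 * 3600000,
  delay_chance: 0,
};

// Hypothetical helper: overlay user-supplied values on the defaults.
function resolveConfig(overrides) {
  return { ...DEFAULTS, ...overrides };
}

// Contents that a JSON configuration file could hold (flat layout assumed).
const fileContents = '{ "seed": 42, "stops": 1200, "delay_chance": 0.2 }';
const config = resolveConfig(JSON.parse(fileContents));

console.log(config.stops);      // 1200
console.log(config.routes);     // 1000
console.log(config.time_final); // 86400000
```

Storing the resolved configuration next to a generated dataset is sufficient to regenerate it later, since the output is deterministic for identical parameters.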

Evaluation

In this section, we discuss our evaluation of PoDiGG. We first evaluate the realism of the generated datasets using a constant seed by comparing their coherence to that of real datasets, followed by a more detailed realism evaluation of each sub-generator using distance functions. Finally, we provide an indicative efficiency and scalability evaluation of the generator and discuss practical dataset sizes. All scripts that were used for the following evaluation can be found on GitHub. Our experiments were executed on a 64-bit Ubuntu 14.04 machine with 128 GB of memory and a 24-core 2.40 GHz CPU.

Coherence

Metric

In order to determine how closely synthetic RDF datasets resemble their real-world variants in terms of structuredness, the coherence metric [10] can be used. In RDF dataset generation, the goal is to reach a level of structuredness similar to real datasets. As mentioned before in Section 2.2, many synthetic datasets have a level of structuredness that is higher than their real-world counterparts. Therefore, our coherence evaluation should indicate that our generator is not subject to the same problem. We have implemented a command-line tool to calculate the coherence value for any given input dataset.
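For readers unfamiliar with the metric, the following is a simplified sketch of a coherence computation, assuming the definition from [10] in which the coverage of each type (the fraction of its property slots that are actually filled) is averaged with a weight equal to the number of properties plus the number of instances of that type. The sketch assumes that every instance has exactly one type, and that the properties of a type are those observed on at least one of its instances. It is not the command-line tool mentioned above, and the input representation is hypothetical.

```javascript
// Sketch of the coherence metric over instances of the form
// { type: string, properties: string[] } (hypothetical representation).
function coherence(instances) {
  // Group instances by type and count how often each property is set.
  const types = new Map();
  for (const { type, properties } of instances) {
    if (!types.has(type)) types.set(type, { count: 0, occurrences: new Map() });
    const entry = types.get(type);
    entry.count += 1;
    for (const p of new Set(properties)) {
      entry.occurrences.set(p, (entry.occurrences.get(p) || 0) + 1);
    }
  }

  let weightTotal = 0;
  let weightedCoverage = 0;
  for (const { count, occurrences } of types.values()) {
    const propertyCount = occurrences.size;
    if (propertyCount === 0) continue; // no properties, so no coverage information
    let filled = 0;
    for (const n of occurrences.values()) filled += n;
    const coverage = filled / (propertyCount * count);
    const weight = propertyCount + count;
    weightTotal += weight;
    weightedCoverage += weight * coverage;
  }
  return weightTotal === 0 ? 0 : weightedCoverage / weightTotal;
}

const exampleInstances = [
  { type: 'Stop', properties: ['name', 'lat'] },
  { type: 'Stop', properties: ['name'] },
  { type: 'Route', properties: ['label'] },
];
const exampleCoherence = coherence(exampleInstances);
console.log(exampleCoherence.toFixed(4)); // 0.8333
```

In this example, the Stop type fills 3 of its 4 property slots (coverage 0.75, weight 4) and the Route type fills its single slot (coverage 1, weight 2), which gives (4 · 0.75 + 2 · 1) / 6 ≈ 0.8333. A dataset in which every instance sets every property of its type reaches the maximum value of 1.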

Results

When measuring the coherence of the Belgian railway, Belgian buses and Dutch railway datasets, we discover high values for both the real-world datasets and the synthetic datasets, as can be seen in Table 3. These nearly maximal values indicate that there is a very high level of structuredness in these real-world datasets. Most instances have all the possible values, unlike most typical RDF datasets, which have values around or below 0.6 [10]. This is because of the very specialized nature of these datasets, and the fact that they originate from GTFS datasets that have the characteristics of relational databases. Only a very limited number of classes and predicates are used, where almost all instances have the same set of attributes. In fact, these very high coherence values for real-world datasets simplify the process of synthetic dataset generation, as less attention needs to be given to factors that lead to lower levels of structuredness, such as optional attributes for instances. When generating synthetic datasets using PoDiGG with the same number of stops, routes and connections as the three gold standards, we measure very similar coherence values, with differences ranging from 0.08% to 1.64%. This confirms that PoDiGG is able to create datasets with the same high level of structuredness as real datasets of these types, as it inherits the relational database characteristics from its GTFS-centric mimicking algorithm.

| | Belgian railway | Belgian buses | Dutch railway |
|---|---|---|---|
| Real | 0.9845 | 0.9969 | 0.9862 |
| Synthetic | 0.9879 | 0.9805 | 0.9870 |
| Difference | 0.0034 | 0.0164 | 0.0008 |

Table 3: Coherence values for three gold standards compared to the values for equivalent synthetic variants.

Distance to Gold Standards

While the coherence metric is useful to compare the level of structuredness between datasets, it does not give any detailed information about how realistic synthetic datasets are in terms of their distance to the real datasets. In this case, we are working with public transit feeds with a known structure, so we can look at the different dataset aspects in more detail. More specifically, we start from real geographical areas with their population distributions, and consider the distance functions between stops, edges, routes and trips for the synthetic and gold standard datasets. In order to check the applicability of PoDiGG to different transport types and geographical areas, we compare with the gold standard data of the Belgian railway, the Belgian buses and the Dutch railway. The scripts that were used to derive these gold standards from real-world data can be found on GitHub.

In order to construct distance functions for the different generator elements, we consider several helper functions. The function $\chi$ in Equation 4 is used to determine the closest element in a set of elements $B$ to a given element $a$, given a distance function $f$. The function $\Delta$ in Equation 5 calculates the distance between all elements in $A$ and all elements in $B$, given a distance function $f$. The computational complexity of $\chi$ is $O(|B| \cdot \kappa(f))$, where $\kappa(f)$ is the cost for one distance calculation for $f$. The complexity of $\Delta$ then becomes $O(|A| \cdot |B| \cdot \kappa(f))$.

$$\chi(a, B, f) := \operatorname*{arg\,min}_{b \in B} f(a, b)$$

Equation 4: Function to determine the closest element in a set of elements.

Equation 5: Function to calculate the distance between all elements in a set of elements.
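The two helper functions can be sketched in code as follows. The closest-element helper follows directly from its description. For the set-level helper, the sketch assumes one plausible aggregation, namely the average distance from every element to its closest counterpart in the other set, taken in both directions. This aggregation is an assumption of the example and may differ from Equation 5. The names `closest`, `setDistance` and `euclidean` are hypothetical, and both sets are assumed to be non-empty.

```javascript
// Closest element of `set` to `a` under the distance function `f`.
function closest(a, set, f) {
  let best = null;
  let bestDistance = Infinity;
  for (const b of set) {
    const distance = f(a, b);
    if (distance < bestDistance) {
      best = b;
      bestDistance = distance;
    }
  }
  return best;
}

// Assumed aggregation: symmetric average of nearest-neighbour distances.
function setDistance(setA, setB, f) {
  let total = 0;
  for (const a of setA) total += f(a, closest(a, setB, f));
  for (const b of setB) total += f(b, closest(b, setA, f));
  return total / (setA.length + setB.length);
}

// Euclidean distance between two points, as used for stops.
const euclidean = (p, q) => Math.hypot(p.x - q.x, p.y - q.y);

const realStops = [{ x: 0, y: 0 }, { x: 4, y: 0 }];
const syntheticStops = [{ x: 0, y: 3 }, { x: 4, y: 0 }];
console.log(setDistance(realStops, syntheticStops, euclidean)); // 1.5
```

Every call to `closest` scans the other set once, so the number of distance evaluations grows with the product of the two set sizes, in line with the complexity stated above.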

Stops Distance

For measuring the distance between two sets of stops $S_1$ and $S_2$, we introduce the distance function $\Delta_s$ from Equation 6. This measures the distance between every possible pair of stops using the Euclidean distance function $d$. Assuming a constant execution time for $d$, the computational complexity for $\Delta_s$ is $O(|S_1| \cdot |S_2|)$.

$$\Delta_s(S_1, S_2) := \Delta(S_1, S_2, d)$$

Equation 6: Function to calculate the distance between two sets of stops.

Edges Distance

In order to measure the distance between two sets of edges $E_1$ and $E_2$, we use the distance function $\Delta_e$ from Equation 7, which measures the distance between all pairs of edges using the distance function $d_e$. This distance function $d_e$, which is introduced in Equation 8, measures the Euclidean distance between the start and endpoints of each edge, and between the different edges, weighted by the length of the edges. The constant term in Equation 8 ensures that the distance between two edges that have an equal length, but exist at a different position, is not necessarily zero. The computational cost of $d_e$ can be considered as a constant, so the complexity of $\Delta_e$ becomes $O(|E_1| \cdot |E_2|)$.

$$\Delta_e(E_1, E_2) := \Delta(E_1, E_2, d_e)$$

Equation 7: Function to calculate the distance between two sets of edges.

Equation 8: Function to calculate the distance between two edges.

Routes Distance

Similarly, the distance between two sets of routes $R_1$ and $R_2$ is measured in Equation 9 by applying the set-distance function $\Delta$ from Equation 5 for the distance function $d_r$. Equation 10 introduces this distance function between two routes, which is calculated by considering the edges in each route and measuring the distance between those two sets using the distance function $\Delta_e$ from Equation 7. By considering the maximum number of edges per route as $e_{max}$, the complexity of $d_r$ becomes $O(e_{max}^2)$. This leads to a complexity of $O(|R_1| \cdot |R_2| \cdot e_{max}^2)$ for $\Delta_r$.